iain's development activities. May contain z80, Cocoa, or whatever.

3 January 2024

On Zipping

In the last thrilling installment on mission creep, I talked about how I had been distracted by a nearly finished Bandcamp collection downloader. Well, just to shave the yak further, I thought a nice feature would be to unzip the downloaded files into place.

My use case is downloading the music to my NAS. At the moment I have to download the zips to my local machine, unzip them manually and upload them by hand, which is a pain.

So I thought: Apple has Compression.framework, which can decompress zip files from a buffered stream, so I could unzip as the file downloads and wouldn’t need any intermediate storage.

I tried a few experiments but quickly realised that Compression.framework doesn’t decompress zip files, it decompresses zlib streams. That is, it handles the compressed data inside the zip file, but not the container format wrapped around it.

I took a look and found a load of Swift packages that can handle the container format, but none that could handle it in a buffered fashion. The only one that hinted it might be possible, ZIPFoundation, seemed to require the full file to be present so it could jump around in it.

Why? Well, it seems that the Zip container format isn’t very conducive to streaming. Each entry has a header that describes the data, but the size of the data can optionally go in an extra section (a data descriptor) AFTER the data, which makes working out how long the data will be trickier. The format does have an archive catalogue (the central directory) which lists every entry along with its sizes and where its header starts, so the robust way to handle it is to read that catalogue first. Except they decided to put the catalogue at the end of the file.
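To make the layout concrete, here is a minimal Swift sketch of reading one local file header, using the field offsets from PKWARE’s APPNOTE.TXT. It isn’t the code from Ohia: `LocalFileHeader` and `readLE` are hypothetical names for illustration, and the sketch assumes UTF-8 file names and ignores Zip64. The interesting part is `hasDataDescriptor`: when general purpose flag bit 3 is set, the CRC and size fields in the header are zero and the real values only turn up after the data.

```swift
import Foundation

/// Reads a little-endian integer of type T starting at `offset`.
func readLE<T: FixedWidthInteger>(_ bytes: [UInt8], at offset: Int) -> T {
    var value: T = 0
    for i in 0..<(T.bitWidth / 8) {
        value |= T(bytes[offset + i]) << (8 * i)
    }
    return value
}

/// The fixed part of a ZIP local file header plus its file name.
/// Offsets follow PKWARE's APPNOTE.TXT (section 4.3.7).
struct LocalFileHeader {
    static let signature: UInt32 = 0x0403_4b50  // "PK\u{3}\u{4}" read little-endian
    static let fixedSize = 30                   // bytes before the file name

    let flags: UInt16
    let compressionMethod: UInt16               // 0 = stored, 8 = deflate
    let crc32: UInt32
    let compressedSize: UInt32
    let uncompressedSize: UInt32
    let fileNameLength: Int
    let extraFieldLength: Int
    let fileName: String

    /// Bit 3 set: the CRC and sizes above are zero, and the real values
    /// follow the file data in a data descriptor.
    var hasDataDescriptor: Bool { flags & 0x0008 != 0 }

    /// Distance from the start of the header to the first byte of file data.
    var headerLength: Int { Self.fixedSize + fileNameLength + extraFieldLength }

    /// Returns nil if the signature doesn't match, or if the header
    /// (including name and extra field) hasn't fully arrived yet.
    init?(bytes: [UInt8]) {
        guard bytes.count >= Self.fixedSize else { return nil }
        let found: UInt32 = readLE(bytes, at: 0)
        guard found == Self.signature else { return nil }

        let nameLength16: UInt16 = readLE(bytes, at: 26)
        let extraLength16: UInt16 = readLE(bytes, at: 28)
        let nameLength = Int(nameLength16)
        let extraLength = Int(extraLength16)
        guard bytes.count >= Self.fixedSize + nameLength + extraLength else { return nil }

        flags = readLE(bytes, at: 6)
        compressionMethod = readLE(bytes, at: 8)
        crc32 = readLE(bytes, at: 14)
        compressedSize = readLE(bytes, at: 18)
        uncompressedSize = readLE(bytes, at: 22)
        fileNameLength = nameLength
        extraFieldLength = extraLength
        // Assumes UTF-8 names (flag bit 11); older archives may use CP437.
        fileName = String(decoding: bytes[Self.fixedSize ..< Self.fixedSize + nameLength],
                          as: UTF8.self)
    }
}
```

Returning nil for a partial header means a streaming caller can simply wait for more bytes and try again.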

Which kinda sucks for trying to do the decompression on the fly.

Anyway, I spent Christmas break reading the Zip format specification and implemented a very very simple unarchiver that takes the async data stream from URLSession and unpacks it as it goes.

The twist is that the files from Bandcamp don’t appear to contain any compressed data, so I don’t even need Compression.framework; it’s just a case of finding the data in the archive and writing it out to disk.
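With no compressed data, the unarchiver can be a small push-based state machine: parse a local header, pass the next `compressedSize` bytes straight through, and repeat until the central directory signature shows up. Below is a hedged sketch of that idea, not Ohia’s actual implementation. `StoredZipStreamReader` and its callbacks are hypothetical, and the sketch assumes every entry uses method 0 (stored), has its sizes in the local header (flag bit 3 clear) and doesn’t need Zip64. Anything else throws.

```swift
import Foundation

/// Minimal push-based reader for ZIP archives whose entries are all stored
/// (method 0) with sizes in the local headers (flag bit 3 clear).
final class StoredZipStreamReader {
    enum ReaderError: Error {
        case unsupportedEntry(String)
        case badSignature(UInt32)
    }

    var onFileStart: (String) -> Void = { _ in }
    var onFileData: ([UInt8]) -> Void = { _ in }
    var onFileEnd: () -> Void = {}

    private var buffer: [UInt8] = []
    private var remaining = 0          // bytes of the current entry still to pass on
    private var inEntry = false
    private(set) var finished = false  // set once the central directory is reached

    /// Feed the next chunk of the download; callbacks fire as entries are found.
    func feed(_ chunk: [UInt8]) throws {
        buffer += chunk
        while !finished {
            if inEntry {
                // Pass through as much of the current entry as we have so far.
                let n = min(remaining, buffer.count)
                if n > 0 { onFileData(Array(buffer[0..<n])) }
                buffer.removeFirst(n)
                remaining -= n
                if remaining > 0 { return }   // wait for more bytes
                inEntry = false
                onFileEnd()
                continue
            }
            guard buffer.count >= 4 else { return }
            let sig = le32(0)
            if sig == 0x0201_4b50 {           // central directory: no more file data
                finished = true
                return
            }
            guard sig == 0x0403_4b50 else { throw ReaderError.badSignature(sig) }
            guard buffer.count >= 30 else { return }
            let nameLen = Int(le16(26)), extraLen = Int(le16(28))
            guard buffer.count >= 30 + nameLen + extraLen else { return }
            let name = String(decoding: buffer[30..<30 + nameLen], as: UTF8.self)
            let flags = le16(6), method = le16(8)
            guard method == 0, flags & 0x0008 == 0 else {
                throw ReaderError.unsupportedEntry(name)
            }
            remaining = Int(le32(18))         // compressed size == stored size
            buffer.removeFirst(30 + nameLen + extraLen)
            inEntry = true
            onFileStart(name)
        }
    }

    private func le16(_ i: Int) -> UInt16 { UInt16(buffer[i]) | UInt16(buffer[i + 1]) << 8 }
    private func le32(_ i: Int) -> UInt32 { UInt32(le16(i)) | UInt32(le16(i + 2)) << 16 }
}
```

On Apple platforms the chunks could come from `URLSession.shared.bytes(from:)`, batching the bytes from its async sequence into buffers of a few tens of kilobytes before calling `feed(_:)`, with `onFileData` appending to a `FileHandle`. Memory use stays at roughly one chunk, and the central directory at the end is simply ignored; the trade-off is that the reader has to trust the local headers.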

The code is nearly ready to go into Ohia, and maybe it’ll be finished soon, ’cos I’ve got a ZX Spectrum Next I want to play with.

3 December 2023

Mission Creep

Back in September I started talking about a new video mixing application I was writing called Cyclorama and then I stopped. Why? Well, I kind of got distracted. Bandcamp got sold to Songtradr and I wanted a way to get all my purchases downloaded from Bandcamp. I have about 1500 purchases on there, so it’s not something that could be done by hand.

There are scripts to do it, but they didn’t work reliably, possibly because of the collection size, and they didn’t support incremental updates, which I wanted so I could download only the things I didn’t already have.

So I started writing a SwiftUI-based app to do it, but I don’t like using janky software, even if it’s something I wrote for myself, so I started work to “productise” it with nice error messages, the ability to log out, nice progress bars, all those things.

Then I found out that I can get track listings and URLs for streaming and now it’s growing and becoming more than a simple downloader.

The irony is it’s been able to download the collection for about a month and a half, but I still haven’t.

Anyway the code is at https://github.com/FalseVictories/Ohia if anyone wants to check it out. It needs an icon, but Bing Create doesn’t understand the difference between a macOS icon and an iOS one.

20 September 2023

Cyclorama

A few years ago when I was doing live gigs, I needed an app that could do simple projections for me and that I could manipulate quickly while on stage. I wrote a very basic application called Cyclorama in C# and Xamarin.Mac that allowed you to have two videos and crossfade between them, and have an optional static image as an overlay. It worked pretty well for what I needed but I felt it could be improved.

When it comes to projections and videos, my style is very much inspired by the experimental filmmaker and Godspeed You! Black Emperor projectionist Karl Lemieux, who uses loops of 16mm footage, layers them on top of each other, and uses subtle manipulations and film burns to create striking scenes.

I’ve recreated this style in FCPX and DaVinci Resolve, but neither of those is simple to use or feasible in a live setting, so I’ve decided to revisit my earlier application and rewrite it, this time in Swift, to recreate this aesthetic rather than just crossfading between videos.

It can create some beautiful images. I’ll write some more in the future about what is happening.

22 April 2023

Been slowly working on Fieldwork while other things have been going on. Spent a lot of time watching SwiftUI videos so I could do SwiftUI “the right way” and I think I’ve figured it out.

I’m not convinced SwiftUI works well with the MVVM pattern that people want to push on it, at least not the standard way of thinking about MVVM. I see people creating a ViewModel class for each of their views and then setting values on it in .onAppear or some similar View method, or having the parent view create a ViewModel, populate it and pass it to the View. But that makes @State awkward, because who really owns that object?

But it also doesn’t feel like it works well with the other way people seem to be using it: one huge monolithic “ViewModel” object that gets passed into every SwiftUI view. That’s not really a ViewModel, that’s just the kind of global model we would have worked with in the old C days.

Among other problems I’ve had with using the above patterns is that they make SwiftUI’s previews really, really awkward to develop.

Someone on Mastodon pointed out that in SwiftUI the Views themselves are the “view model”, and that makes more sense to me.

So I’ve rewritten a lot of Fieldwork to do away with ViewModels; instead, each view only gets passed the simple types it needs.

As an example, the InfoView displays the name of the file, the length of the sample, the bitrate, the number of channels, etc.: metadata-type stuff. Previously it took a Recording as a parameter and dug through it to find the right metadata: the name came from Recording.metadata, the bitrate and number of frames from Recording.sample.bitrate and so on.

This meant to create a preview for this, I needed to create a Recording for the preview, which meant setting up the RecordingService, the FileService and faking lots of things that the preview didn’t need to care about.

The preview doesn’t need to know about the Recording class; it just wants a few strings to display. It doesn’t even care whether the bitrate is a number, it just wants a string.

So now the InfoView just takes 4 string parameters when it is created and it becomes its own ViewModel.
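A minimal sketch of what that can look like. The parameter names, the `durationString(frames:sampleRate:)` helper and the preview values are hypothetical, not Fieldwork’s actual code; the point is that the preview is built from literal strings, while turning sample metadata into text happens in whichever view owns the Recording. The SwiftUI part sits inside `#if canImport(SwiftUI)` only so the plain helper also compiles where SwiftUI isn’t available.

```swift
import Foundation
#if canImport(SwiftUI)
import SwiftUI
#endif

/// Hypothetical helper used by the *parent* view: turns a frame count into
/// "m:ss" so InfoView only ever receives a String.
func durationString(frames: Int, sampleRate: Double) -> String {
    let totalSeconds = Int(Double(frames) / sampleRate)   // truncates toward zero
    let seconds = totalSeconds % 60
    let pad = seconds < 10 ? "0" : ""
    return "\(totalSeconds / 60):\(pad)\(seconds)"
}

#if canImport(SwiftUI)
/// Shows file metadata. It takes only the strings it displays, so the view
/// is effectively its own view model and knows nothing about Recording.
@available(macOS 13, iOS 16, *)
struct InfoView: View {
    let name: String
    let length: String
    let bitrate: String
    let channels: String

    var body: some View {
        Form {
            LabeledContent("Name", value: name)
            LabeledContent("Length", value: length)
            LabeledContent("Bitrate", value: bitrate)
            LabeledContent("Channels", value: channels)
        }
    }
}

/// The preview needs no services and no fake Recording, just literal strings.
@available(macOS 13, iOS 16, *)
struct InfoView_Previews: PreviewProvider {
    static var previews: some View {
        InfoView(name: "Dawn chorus.wav", length: "4:32",
                 bitrate: "1411 kbps", channels: "2")
    }
}
#endif
```

The parent would call something like `durationString(frames:sampleRate:)` when it builds the InfoView, so the formatting can be tested on its own and the view stays trivially previewable.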

Swinging this back to the source of truth, the source of truth is at the highest level it needs to be. There is a large monolithic class at the very top holding things that views need to know about, but anything that’s needed further down the view hierarchy is just passed in, either as a simple type parameter or as a binding to one. No view needs to know about the large monolithic class holding everything together.

And now Fieldwork is fairly easy to understand, and every view can be previewed easily.

15 February 2023

A change of direction: I’ve returned to an application I was writing near the end of last year, mostly because I’m disappointed my Sam Coupé platform scroller was a bust.

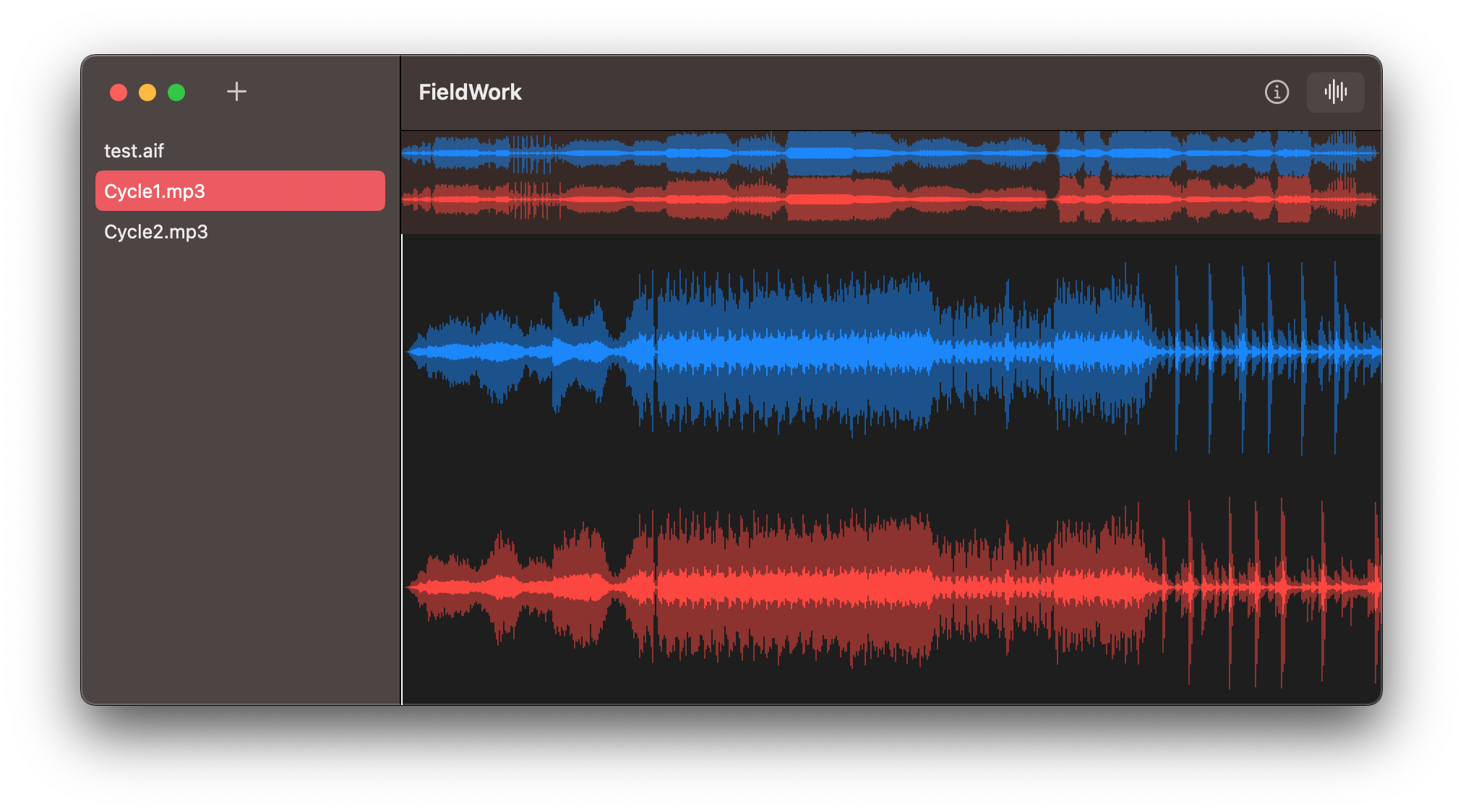

Fieldwork, a field recording organiser / editor. Think Lightroom for audio. It looks like this currently

The main window is SwiftUI, the editor display is AppKit, and it’s written in a mix of Swift, ObjC and C, although I’d like to reduce the amount of ObjC over time and leave it as a simple layer that Swift can call to access the sample data.

It’s using a lot of the code from my previous sample editor project Marlin. It can handle very large files, and operate on them very quickly, and at the moment I’m working out how to integrate it all with a SwiftUI declarative UI.

Future plans include writing a UIKit version of the editor display and seeing whether it runs on iOS/iPadOS.